Natalie Dennis of the Nat and Lo YouTube channel posted a new Google Blog entry this Wednesday explaining how style transfer works. Style transfer is the machine learning technique behind all those artsy photos on social media that imitate famous paintings.

Nat and Lo are a couple of YouTubers dedicated to “demystifying the technology in our everyday lives.” The two content creators have exclusive access to Google, and they often post videos about the company’s different teams, projects, and products.

This time they set out to answer the question ‘What Does A.I. Have To Do With This Selfie?’ The selfie in question is a picture of Nat and Lo with a filter applied that makes it look like a work of art.

More is going on than meets the eye, and the pair explain how our devices achieve this effect.

How does an ordinary photo filter work?

Photo filters typically work in a much simpler way than style transfer. Platforms like Instagram and Google’s own Snapseed photo editor offer a broad range of filters that simply adjust certain properties of the picture.

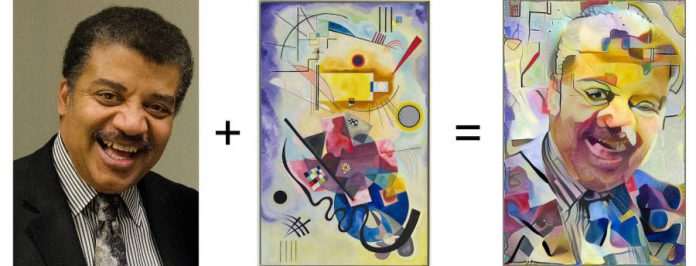

For example, an Instagram filter may alter the way your photo looks by applying a new color configuration or adding a different texture to the image. The pictures that look like Kandinsky paintings, by contrast, rely on a much deeper principle.
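To make the contrast concrete, here is a minimal sketch of an ordinary color filter, assuming the simplest possible design: each pixel is recomputed independently from its own red, green, and blue values through a fixed 3×3 matrix. The sepia coefficients are a commonly published set, not the values Instagram or Snapseed actually use, and `apply_color_matrix` is a hypothetical name.

```python
# Minimal sketch of an "ordinary" photo filter: a fixed per-pixel color matrix.
# Assumption: the sepia coefficients are a widely circulated example set,
# not the filter any particular app ships with.

Pixel = tuple[int, int, int]

SEPIA = (
    (0.393, 0.769, 0.189),  # weights of (R, G, B) for the new red channel
    (0.349, 0.686, 0.168),  # weights of (R, G, B) for the new green channel
    (0.272, 0.534, 0.131),  # weights of (R, G, B) for the new blue channel
)


def apply_color_matrix(pixels: list[Pixel], matrix=SEPIA) -> list[Pixel]:
    """Return a new image where every pixel is transformed on its own."""
    out = []
    for r, g, b in pixels:
        new = []
        for row in matrix:
            value = row[0] * r + row[1] * g + row[2] * b
            new.append(min(255, round(value)))  # clamp to the 8-bit range
        out.append(tuple(new))
    return out


print(apply_color_matrix([(255, 0, 0), (255, 255, 255)]))
# [(100, 89, 69), (255, 255, 239)]
```

Because no pixel ever looks at its neighbours, a filter like this can recolor a photo but cannot repaint it with new brush strokes or shapes, which is the job style transfer takes on.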

What is style transfer and how does it work?

Style transfer is an image creation process that uses deep neural networks to combine the content of one image with the visual style of another.

Take the picture from the video as an example. Passing that dog photo through a Van Gogh style transfer ‘filter’ produces a new image of the dog as if the Dutch painter had painted it in the style of ‘The Starry Night’.
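One widely used way to state “keep the content of one image, take the style of another” mathematically comes from Gatys et al. (2015), which the source does not name; the sketch below assumes that formulation. Content is compared through raw network activations, while style is compared through Gram matrices, which record which features occur together but not where. The feature maps here are plain lists standing in for the output of a real network layer, and only a single layer is used; all function names are hypothetical.

```python
# Sketch of the content and style losses from Gatys et al. (2015), one layer only.
# Assumption: feature maps are plain lists, one list of activations per channel,
# standing in for the outputs of a pretrained network such as VGG.

Features = list[list[float]]  # [channel][spatial position]


def gram_matrix(features: Features) -> list[list[float]]:
    """Channel-by-channel correlations summed over all positions.

    Summing over positions keeps which features co-occur (texture, palette,
    brush strokes) but throws away where they occur in the image.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]


def content_loss(generated: Features, content: Features) -> float:
    # Half the squared difference of raw activations: penalizes changes in layout.
    return 0.5 * sum(
        (g - c) ** 2 for gch, cch in zip(generated, content) for g, c in zip(gch, cch)
    )


def style_loss(generated: Features, style: Features) -> float:
    n = len(generated)     # number of channels
    m = len(generated[0])  # number of spatial positions
    g_gen, g_sty = gram_matrix(generated), gram_matrix(style)
    sq = sum((a - b) ** 2 for ra, rb in zip(g_gen, g_sty) for a, b in zip(ra, rb))
    return sq / (4 * n**2 * m**2)


def total_loss(generated: Features, content: Features, style: Features,
               alpha: float = 1.0, beta: float = 1000.0) -> float:
    # alpha and beta trade "looks like the photo" against "looks like the painting".
    return alpha * content_loss(generated, content) + beta * style_loss(generated, style)


photo = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
shuffled = [[3.0, 1.0, 2.0], [6.0, 4.0, 5.0]]  # same values, positions moved
print(style_loss(photo, shuffled))    # 0.0: identical texture statistics
print(content_loss(photo, shuffled))  # 6.0: different spatial layout
```

The shuffled example shows the key design choice: rearranging positions leaves the style loss untouched but raises the content loss. In the original method, the pixels of the output image are adjusted step by step to minimize `total_loss` over several layers; faster systems instead train a separate network once so that it produces a stylized image in a single pass.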

Our computers and devices can create these images thanks to deep neural networks. These systems are loosely inspired by the brain, and they are a fundamental part of machine learning and artificial intelligence.

Like the brain, neural networks are arranged in layers, and each layer is dedicated to detecting specific features, from simple edges and colors in the early layers to more complex shapes and textures in deeper ones. Once a picture has run through all the layers, the machine ‘knows’ how to rearrange the patterns it detected to match the desired style.
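What “a layer detects a feature” means can be shown with a single convolutional layer, sketched below under stated assumptions. A small kernel slides over a grayscale image, and a ReLU keeps only positive responses, so the output lights up where the pattern is present. The vertical-edge kernel is hand-picked for illustration; in a trained network the kernels are learned from data, and real layers have many kernels and many channels. The names `conv2d`, `relu`, and `layer` are hypothetical.

```python
# Sketch of one convolutional layer: slide a kernel over the image, then ReLU.
# Assumption: a hand-picked vertical-edge kernel; real networks learn their kernels.

Grid = list[list[float]]

VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(grid: Grid) -> Grid:
    # Keep positive responses, zero out the rest.
    return [[max(0.0, v) for v in row] for row in grid]


def layer(image: Grid, kernel: Grid) -> Grid:
    # Stacking such layers lets later ones respond to combinations
    # of the features found by earlier ones.
    return relu(conv2d(image, kernel))


# A 4x5 grayscale image with a dark-to-bright vertical boundary.
image = [[0.0, 0.0, 1.0, 1.0, 1.0]] * 4
print(layer(image, VERTICAL_EDGE))
# [[3.0, 3.0, 0.0], [3.0, 3.0, 0.0]]
```

The strong responses sit where the boundary falls inside the kernel’s window and vanish over the flat bright region, which is the sense in which this layer “detects” vertical edges.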

Apps that support style transfer filters take just seconds to perform the whole process, and they let users adjust how strongly the picture takes on the chosen style.
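The source does not say how apps implement the strength setting. The simplest approach, assumed here purely for illustration, is a per-pixel linear blend between the original photo and the fully stylized result. Some real systems interpolate inside the network instead, and `blend` is a hypothetical name.

```python
# Sketch of a "style strength" slider as a per-pixel linear blend.
# Assumption: the app has both the original photo and a fully stylized version;
# this is an illustration, not how any specific app is known to do it.

Pixel = tuple[int, int, int]


def blend(original: list[Pixel], stylized: list[Pixel], strength: float) -> list[Pixel]:
    """strength=0.0 returns the photo, strength=1.0 returns the full stylization."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return [
        tuple(round((1 - strength) * o + strength * s) for o, s in zip(po, ps))
        for po, ps in zip(original, stylized)
    ]


photo = [(200, 100, 60)]
painting = [(40, 60, 180)]
print(blend(photo, painting, 0.25))  # [(160, 90, 90)]
```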

This technology is not limited to social media photos: Google researchers are also applying it to video, live streaming, 3D modeling, and more.

Source: Google Blog