Google software engineers Valentin Bazarevsky and Andrei Tkachenka have been developing a more practical way for video creators to change backgrounds. It needs no laborious Photoshop work, no photo booth, and no green screen, since everything runs in an app on the user’s phone.

The technology is built specifically for video content creators and could be a game changer once it is widely released. For now, developers are rolling it out in YouTube stories to test the software on its first set of effects, on both selfies and videos.

According to Google’s Research Blog, the plan is to expand its use “throughout Google’s broad augmented reality services” as the software and app are improved and the segmentation technology is extended. The researchers also reported that it runs at 100+ FPS on iPhone 7 and 40+ FPS on Pixel 2.

A new tool for YouTube content creators. https://t.co/LHmnNPxoI7

— Mashable (@mashable) March 4, 2018

How background replacement works on YouTube (BETA):

Google researchers used a convolutional neural network architecture as the basis for this video segmentation tool, which is still in its initial beta stage. That means YouTube stories users who have already tried the new feature are effectively beta testers.

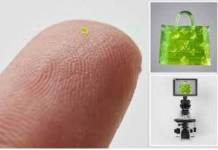

Instead of painstakingly picking out the subject’s body features by hand, as movie production requires, Google AI researchers trained a neural network to recognize eyes, glasses, mouths, and basically any other feature a person can show on camera. The background can then be swapped effortlessly, increasing the video’s value with no need for special equipment.

No green screen required! https://t.co/v0d0W6DC9R

— CNET (@CNET) March 2, 2018

According to Digital Trends, this is how the recognition software functions:

“Once the software masks out the background on the first image, the program uses that same mask to predict the background in the next frame. When that next frame has only minor adjustments from the first…the program will make small adjustments to the mask. When the next frame is much different from the last …the software will discard that mask prediction entirely and create a new mask.”
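The loop described in that quote can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Google's implementation: the quote does not say how "much different" is measured, so the mean pixel difference, the `reset_threshold` value of 0.3, and the stand-in `segment` and `refine` functions are all hypothetical. In the real system a trained neural network would produce and adjust the masks.

```python
from typing import List, Tuple

Frame = List[float]  # flattened grayscale pixels, each in [0, 1]
Mask = List[float]   # per-pixel foreground score, each in [0, 1]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def segment(frame: Frame) -> Mask:
    """Hypothetical stand-in for the network: bright pixels count as foreground."""
    return [1.0 if pixel > 0.5 else 0.0 for pixel in frame]


def refine(frame: Frame, previous_mask: Mask) -> Mask:
    """Hypothetical small adjustment: blend the old mask with a fresh estimate."""
    fresh = segment(frame)
    return [0.5 * old + 0.5 * new for old, new in zip(previous_mask, fresh)]


def segment_video(
    frames: List[Frame], reset_threshold: float = 0.3
) -> List[Tuple[Mask, bool]]:
    """Return (mask, reused_previous_mask) for every frame.

    reset_threshold is an assumed cutoff; above it the previous mask is
    discarded and a new one is created from scratch.
    """
    results: List[Tuple[Mask, bool]] = []
    previous_frame = None
    previous_mask = None
    for frame in frames:
        if previous_mask is None:
            # First frame: nothing to reuse yet.
            mask, reused = segment(frame), False
        elif frame_difference(previous_frame, frame) > reset_threshold:
            # Scene changed a lot: discard the prediction, build a new mask.
            mask, reused = segment(frame), False
        else:
            # Minor change: keep the previous mask and adjust it slightly.
            mask, reused = refine(frame, previous_mask), True
        results.append((mask, reused))
        previous_frame, previous_mask = frame, mask
    return results


if __name__ == "__main__":
    clip = [
        [0.9, 0.1, 0.9, 0.1],  # first frame
        [0.9, 0.1, 0.8, 0.1],  # nearly identical to the first
        [0.1, 0.9, 0.1, 0.9],  # very different scene
    ]
    for mask, reused in segment_video(clip):
        print("reused previous mask" if reused else "created new mask", mask)
```

Reusing the previous mask is what makes the approach cheap enough for a phone: most consecutive frames barely differ, so a full reset is only needed when the scene changes sharply.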

However, the developers have acknowledged that the software is far from 100 percent ready, regardless of how much AI speeds up technology development. Researchers said the neural network is designed to identify two eyes on either side of the nose, but when a person is in profile, only one eye may be visible to the camera, and in that case the recognition may not work.

This breakthrough is a sign of how much developers can rely on AI when it comes to solving computational problems.

Source: Google