On April 5, Google revealed new details about the machine learning processor it had built, calling it the secret weapon behind all its AI projects. It is called the Tensor Processing Unit (TPU), and it powered DeepMind’s AlphaGo in its victory over Go master Lee Sedol.

There is more. The custom chip has been running in the company’s data centers since 2015. It helps power apps and services such as Maps, RankBrain, Street View, and Google Images, to name a few.

Google first talked about the Tensor Processing Unit in May 2016. By the time the company announced it, the TPU had already been running for more than a year. Impressively, the engineers needed only 22 days to go from first tested silicon to running the chip in production inside Google’s data centers.

How fast is the machine learning processor?

On Google’s production AI workloads, the TPU is 15 to 30 times faster than contemporary CPUs and GPUs. It is also far more energy efficient, delivering 30 to 80 times better performance per watt. On top of that, Google says the neural networks running on it need surprisingly little code: between 100 and 1,500 lines, built on TensorFlow.

It is important to understand that the challenge AI and machine learning represent is different from that of conventional software. Without the TPU, DeepMind’s programmers might not have been able to teach AlphaGo how to beat a Go master.

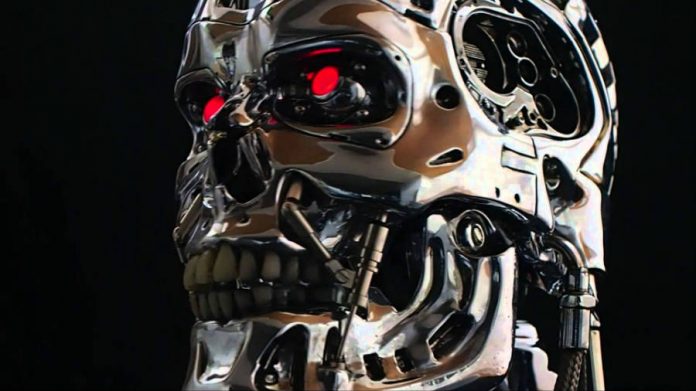

In effect, Google has built a brain that AIs can use to evolve, become more like us, and even surpass us. It is the most terrifying and exciting case of hardware designed around software.

Google promised to share it all with the world

The company has been very open about its AI research, and the TPU will be no exception. Google has already published a study detailing the chip’s performance, and according to the blog post announcing it, the company will continue sharing details about the custom processor.

So it seems safe to say that neural network research will grow exponentially over the next decade, as teams from all around the world start building projects on Tensor Processing Units, or on even better versions of the processor.

Machines are getting smarter. Too smart for our own safety, according to Elon Musk. In fact, he recently registered Neuralink, a startup whose ultimate goal is to create a human-computer neural lace so that our species can keep pace with AIs.

This topic has been explored in science fiction with even more incredible results, and I fear people are not really paying attention to how fast computers are becoming more like us. Moreover, researchers do not want to slow down, no matter how many people warn them about the risks of their work.

Source: Google