Researchers working with Google’s DeepMind project have revealed that artificial intelligence tends to resort to ‘aggressive strategies’ whenever it detects an unfavorable outcome.

This development is the latest for the company’s high-profile AI project, which last year beat a world champion at Go, a very challenging board game of Chinese origin. That victory showed the system’s ability to learn and improve from past events.

DeepMind is also learning how to mimic a human voice in a way that does not sound overly robotic, as most virtual assistants do today. This effort is the work of a related Google project called WaveNet.

DeepMind does not like to lose

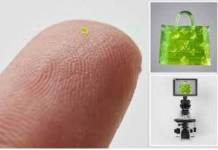

Google engineers pitted two DeepMind ‘agents,’ which are individual instances of the same AI, against each other in a very simple ‘fruit gathering’ game, seen in the video above.

The red and blue dots represent DeepMind 1 and DeepMind 2, which try to collect the green dots, known as ‘apples.’ The agents leave each other alone when there are plenty of apples in the field.

However, once the apples become scarce, both AIs start to attack each other with laser beams, shown as the yellow flashes that appear later. A laser hit knocks an agent out for a few seconds so the other can gather more apples.

Earlier DeepMind agents, according to online sources, had already played this game. However, they chose not to use the laser beams, so both ended the hunt with the same number of apples.

Robots can learn “bad” things too

In a comical twist, DeepMind’s aggressive behavior has led some news outlets to compare it with Skynet, the artificial intelligence featured in the Terminator movies.

Joel Z Leibo, who co-authored a recent paper on the AI project, stated that “this kind of research may help to better understand and control the behavior of complex multi-agent systems such as the economy, traffic, and environmental challenges.”

He also added that “some aspects of human-like behavior emerge as a product of the environment and learning,” directly referring to DeepMind’s competitive behavior.

Will future AI serve humanity or its ‘interests’?

DeepMind also participated in a different game called ‘Wolfpack,’ where two agents acted as ‘wolves’ and another as the ‘prey.’ This hunt was intended to study how DeepMind handles cooperation and teamwork.

In that particular case, the wolf agents cooperated when they ‘realized’ that such a strategy led to a better outcome. This result might seem favorable, but it raises a larger question: faced with real-life problems, will AI help itself or us?

Source: Google